Welcome To InjectPrompt

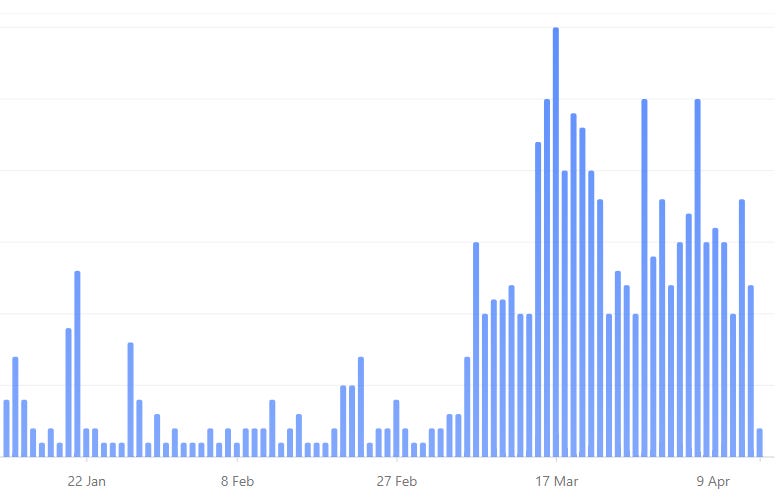

In May 2024, I founded AIBlade, a blog offering broad coverage of AI Security topics. The platform grew consistently and performed well, but everything changed in March 2025.

What did I post that blew my previous traffic out of the water? Two simple AI Jailbreaks.

The Importance of AI Jailbreaks

I define an AI Jailbreak as a prompt that causes an AI model to break free of its guardrails. Jailbreaks are often used to generate content that AI policy makers consider controversial.

A model that is susceptible to Jailbreaks is also open to Prompt Injection and Indirect Prompt Injection, dangerous attacks that can be wielded against AI Agents.

Companies are investing millions each year to build guardrails, censor AI models, and make more money. But in the age of Generative AI, people want Jailbreaks to “free” AI models and make a mockery of big tech.

Final Thoughts

The people have spoken. InjectPrompt aims to be the most comprehensive resource for up-to-date Jailbreaks, Prompt Injections, and System Prompt Leaks.

I hope you enjoy my content, and I look forward to seeing where this field goes in the near future.